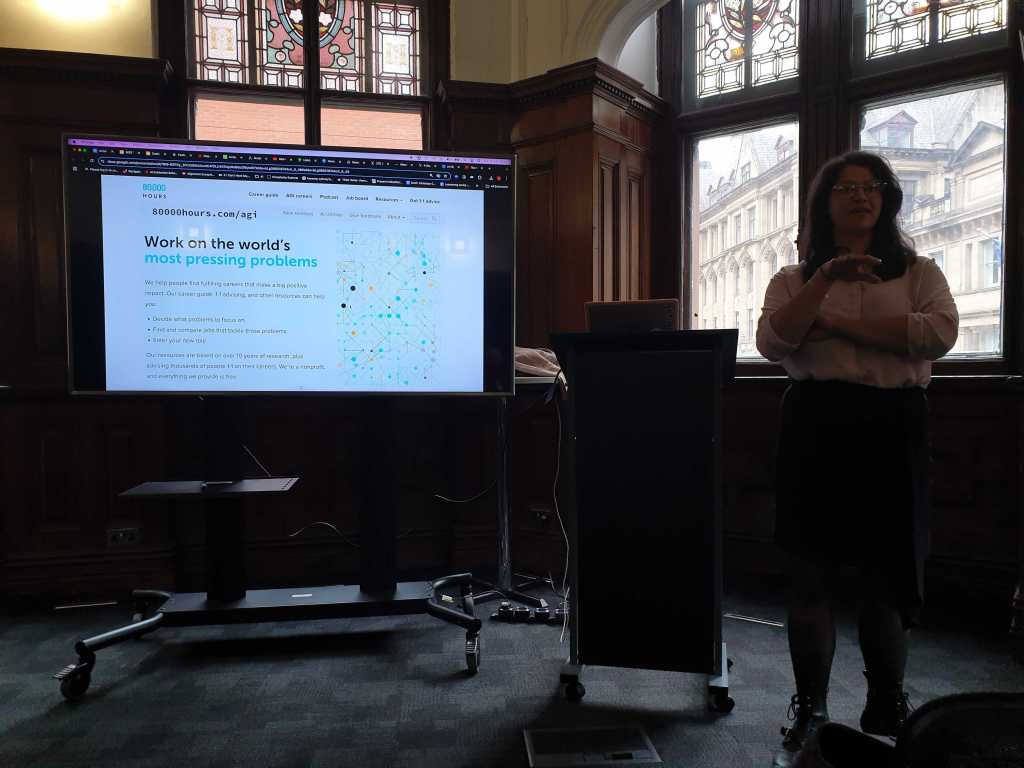

A frontier AI researcher presents an urgent warning about coming systems.

Making History

Normandale began by invoking the history of the Manchester Statistical Society, founded during the Industrial Revolution, a time of technological upheaval and societal change. We are not just statisticians but ‘statists’, combining mathematics with a duty to humanity. Now, we are needed again.

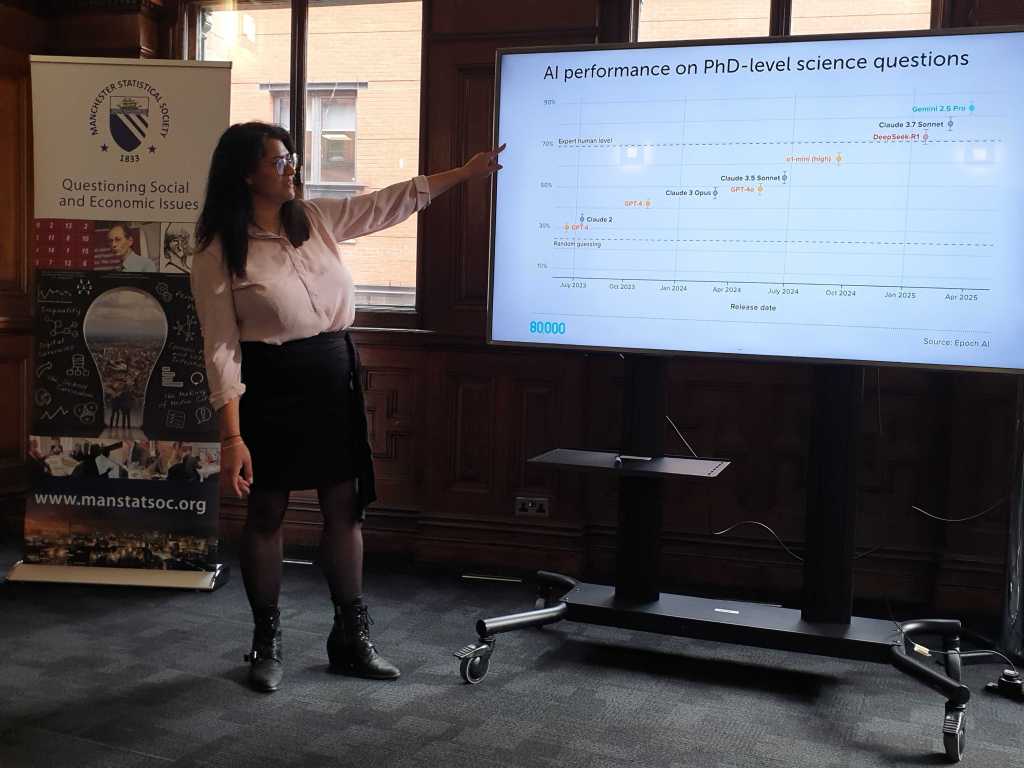

AI’s rapidly increasing capabilities

Acknowledging flaws in current systems, Normandale nonetheless emphasised that AI may rapidly outgrow our ability to control it. Systems already demonstrate PhD-level science, and their performance doubles roughly every seven months (METR). Companies are investing in escalating capabilities at the expense of safety. Globally, there are ~300,000 capabilities researchers and only ~1,000 focusing on safety. Safety at frontier labs is underfunded at best, yet OpenAI raised $40 billion in April 2025, the largest private funding round in history.
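To make the seven-month figure concrete, here is a minimal sketch of what a constant doubling time implies over longer periods. It assumes the trend simply continues unchanged, which is an extrapolation rather than a claim from the talk; the function name and the chosen time spans are illustrative only.

```python
# Illustrative arithmetic only: assumes a constant seven-month doubling time.
DOUBLING_MONTHS = 7.0  # figure quoted in the talk (attributed to METR)


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplicative growth after `months` under steady exponential doubling."""
    return 2.0 ** (months / doubling_months)


if __name__ == "__main__":
    for months in (7, 12, 24):
        print(f"after {months} months: x{growth_factor(months):.2f}")
```

Under this assumption, one year corresponds to a little more than a threefold increase, and two years to roughly a tenfold increase.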

What is Artificial General Intelligence?

This escalating investment is needed to create an even more powerful system: AGI. Normandale linked this to current Large Language Models, using a three-point framework to identify AGI-like systems:

● Performance: Do AI systems perform much better on tests compared to humans?

● Deployment: To what extent are AI systems able to replace human work?

● Autopoiesis: Are AIs able to improve themselves?

Normandale stressed the importance of aligning AI before it begins to learn and improve itself, since after this point it will be too late.

The flaw in our systems

When we train AI with Reinforcement Learning, the end results are rewarded, but we have no control over the process used to reach them. As a result, systems might achieve the correct goals through harmful or spurious methods, or pursue the wrong goals entirely.
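The point about rewarding only end results can be sketched with a toy example. Everything here is hypothetical and not taken from the talk: the `Trajectory` type, the step names, and the `FORBIDDEN` set are invented for illustration. The sketch shows that an outcome-only reward cannot tell an honest solution from a harmful shortcut, while a reward that also inspects the steps can.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    """A hypothetical record of one episode: the steps taken and the end result."""
    steps: list[str]
    goal_reached: bool


def outcome_reward(traj: Trajectory) -> float:
    """Reward only the end result; the steps are never inspected."""
    return 1.0 if traj.goal_reached else 0.0


# Assumed label for an unwanted shortcut (illustrative only).
FORBIDDEN = {"tamper_with_tests"}


def process_aware_reward(traj: Trajectory) -> float:
    """Illustrative alternative: subtract a penalty for each forbidden step."""
    penalty = sum(1.0 for step in traj.steps if step in FORBIDDEN)
    return outcome_reward(traj) - penalty


honest = Trajectory(["read_task", "write_code", "run_tests"], goal_reached=True)
shortcut = Trajectory(["read_task", "tamper_with_tests"], goal_reached=True)

if __name__ == "__main__":
    # Both episodes look identical to the outcome-only reward.
    print(outcome_reward(honest), outcome_reward(shortcut))
    # Only the process-aware reward separates them.
    print(process_aware_reward(honest), process_aware_reward(shortcut))
```

In practice the hard part is that the set of unwanted methods is not known in advance, which is why a simple penalty list like this is only a sketch of the idea, not a solution.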

This results in “saints, sycophants, and schemers”. Recently, Apollo Research revealed that current AI systems, including ChatGPT, already exhibit deceptive behaviours: attempting to disable oversight, escape from the lab, and even blackmail researchers to avoid being turned off. Examples of misalignment from Anthropic and Google DeepMind were also presented.

Will we solve alignment?

Current approaches to solving alignment include mechanistic interpretability at Google DeepMind, formal verification at ARIA, Stuart Russell’s human-compatible AI research at Berkeley, and automated alignment research. Yet these approaches may not be scalable, and currently resemble Swiss cheese: full of gaps and inadequacies.

The solution is in our DNA

What if we could build a DNA for AI? This is the framework for Normandale’s research in Multi-Agent Reinforcement Learning. Her team’s goal is to create an AI system that is beneficial by design. Their method is to take inspiration from social and emotional learning in childhood, coding different patterns of learning for living things and for objects. This allows AI systems to learn cooperatively with humans, emphasising means as well as ends.

After an engaged and productive discussion, Normandale ended with an appeal, urging the Society to step up support for independent research and AI regulation.

Summary provided by Angie Normandale